(Part 3)

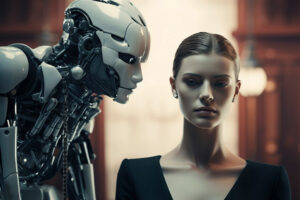

The case of the US youth who committed suicide, abetted by a chatbot so human-like that the adolescent literally fell in love with it, illustrates how important it is to regulate the AI industry to prevent abuses of this otherwise very beneficial technology. But we cannot afford to throw the baby out with the bathwater. Before we start demanding stronger and stronger state control, the principle of subsidiarity requires that we first find ways for the private sector, both business and civil society, to regulate the profit-making activities of AI enterprises.

In fairness to Character.AI, which developed the bot used by the hapless youth, the company responded to the tragic event by immediately adopting safety measures to prevent a similar situation from happening in the future. As one example of responsible leadership in the age of AI, let us describe its response in detail.

First, it declared its business mission, then explained how it was responding to the case: “Our goal is to offer the fun and engaging experience our users have come to expect while enabling the safe exploration of the topics our users want to discuss with Characters. Our policies do not allow non-consensual sexual content, graphic or specific descriptions of sexual acts, or the promotion or depiction of self-harm or suicide. We are continually training the large language model (LLM) that powers the Characters on the platform to adhere to these policies.”

As a specific response to the tragic case of suicide, Character.AI management started investing heavily in its trust and safety processes and internal team. Being a relatively new company, it hired a Head of Trust and Safety and a Head of Content Policy, and it brought in additional engineering safety support staff. The firm plans to continue growing and evolving in this area of safety. Management also recently put in place a pop-up resource that is triggered when a user inputs certain phrases related to self-harm or suicide, directing the user to the National Suicide Prevention Lifeline.

Worthy of emulation by similar AI enterprises are the new safety and product features that Character.AI intends to roll out. These are meant to strengthen the security of its platform without compromising the entertaining and engaging experience users have come to expect from the company. Among these features are:

• Changes to its models for minors (under the age of 18) that are designed to reduce the likelihood of encountering sensitive or suggestive content.

• Improved detection, response, and intervention related to user inputs that violate the firm’s Terms or Community Guidelines.

• A revised disclaimer on every Chat to remind users that the AI is not a real person.

• Notification when a user has spent an hour on the platform, with additional user flexibility in progress.

Character.AI is also now engaging in proactive detection and moderation of user-created Characters, including the use of industry-standard and custom blocklists that are regularly updated. Proactively and in response to user reports, the enterprise removes Characters that violate its Terms of Service. It also adheres to Digital Millennium Copyright Act (DMCA) requirements and takes swift action to remove reported Characters that violate copyright law or its own policies. Users may notice that the company recently removed a group of Characters that had been flagged as violative, and these will be added to its custom blocklists in the future. This means users will also lose access to their chat history with the Characters in question.

In general, Character.AI is committed to implementing enhanced safety systems to better protect all users, particularly young ones, including the following:

• Enhanced Guardrails: Limiting access to content for people under 14.

• Session alerts: Notifying users who spend more than an hour interacting with the chatbots.

• Suicide Prevention Features: Pop-ups directing individuals to suicide prevention hotlines when certain red flag phrases are detected.

These measures, implemented by a leading participant in the AI industry, are a good beginning in addressing the ethical challenges of the ongoing industrial revolution. Obviously, they are not sufficient to truly protect the millions of users of Character.AI, who include not only minors but also people with mental illnesses and other individuals facing psychological stress. This concern applies not only to Character.AI’s policies but also to those of the hundreds of companies that introduce chatbots and other AI beings into the virtual world day in and day out.

Again, following the principle of subsidiarity, the consuming public must be ever vigilant in giving feedback to the producers of AI and, if necessary, in filing lawsuits against the irresponsible use of the technology, as the mother of the teenager who committed suicide did against Character.AI.

Bernardo M. Villegas has a Ph.D. in Economics from Harvard, is professor emeritus at the University of Asia and the Pacific, and a visiting professor at the IESE Business School in Barcelona, Spain. He was a member of the 1986 Constitutional Commission.

bernardo.villegas@uap.asia